The Uncanny Valley: Advances and Anxieties of AI That Mimics Life

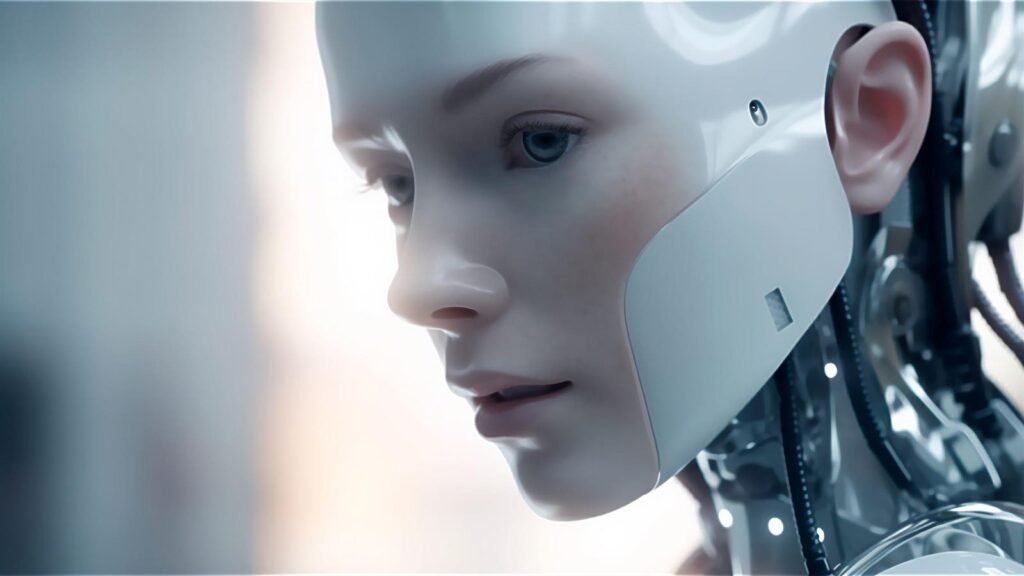

Seeing something that looks almost, but not quite, human can be a strange and even frightening experience for some people. This phenomenon has been known for decades as the uncanny valley. In recent years, however, it has become more relevant as realistic robots, computer-generated imagery (CGI) in film and digital avatars become part of everyday life.

The term was coined in 1970 by the Japanese roboticist Masahiro Mori. It refers to the steep dip in a graph that plots a person’s emotional response to an object against how human the object appears. Mori predicted that our affinity would rise as an object looked more human, then plunge into a strongly negative response for anything that looked very human, but not quite.

So, is this something we will encounter more often as technology in all its forms becomes more lifelike? And what problems is it likely to cause in a society where it is increasingly difficult to tell what is real and what is virtual?

AI And The Uncanny Valley

Robots that we can’t tell apart from humans may still be a long way off, although there have been some impressive recent advances in this area, including robots such as Ameca, which can display human-like facial expressions.

Computer-generated people, however, have reached the point where, if we didn’t already know we were watching a movie or playing a game, it would sometimes be very difficult to tell whether they were real.

Given the speed at which technology is improving, it’s not hard to believe that we’ll soon have virtual reality simulations with much higher graphical fidelity than what we see today.

Perhaps most importantly, artificial intelligence (AI) is making it possible to create synthetic human faces and voices that come ever closer to the real thing.

Ultimately, this means that as time goes on, we are likely to experience the uncanny valley effect more often.

Consequences and challenges

So why is this a problem?

Well, we still don’t know what impact increasing exposure to realistic simulated humans, and to simulated human intelligence, will have on our mental health.

For some, the uncanny valley is already creating heightened emotional distress and anxiety. If this escalates as more of us are exposed, it could lead to a widespread collapse of trust in technology. For those of us who believe that AI has enormous potential to do good in the world, that would be a shame.

From the perspective of those who build the technology, anyone who spends millions or billions of dollars designing and building AI robots and digital humans probably doesn’t want us to feel repelled by them.

Many upcoming use cases for AI, such as healthcare and customer service, are situations where it should reduce stress and anxiety rather than add to it.

So the challenge will involve balancing functionality – the ability to provide the service we expect from a robot or artificial intelligence – with a form that avoids evoking the eerie sensations we’re discussing here.

Conquering the Uncanny Valley

The challenge of creating “acceptable” robots and artificial intelligence is as much a psychological and social problem as a technical one.

Overcoming it will likely require collaboration between engineers and people with “soft” skills in areas such as language, psychology and emotional intelligence.

For AI robots, digital humans and avatars, this will mean reproducing the subtle, individual behaviors we use to communicate qualities such as trust and compassion during social interactions.

However impressive the current generation of synthetic human technology can be at times, these behaviors are still largely absent from it.

Person-to-person interaction is extremely complex and nuanced. Everything from body language to tone of voice and our experience of a person affects how we perceive and react to our fellow human beings. Robotics engineers and creators of digital humans are still only scratching the surface when it comes to simulating this.

When we get there, we’ll likely end up with algorithms that learn, rather than being explicitly programmed, to read and respond to human behaviors in real time, automatically adjusting their actions to suit the context of a situation.

Progress has already been made in this area – emotional AI refers to machines that attempt to detect and interpret human emotional signals and react appropriately.

Another possibility is that our understanding of the nature of artificial intelligence is evolving, and we are beginning to see it as a tool to augment our abilities rather than simply simulate them.

If this is the case, we might be less concerned with building systems that “seem” human to us and more focused on those that can do things we cannot.

However, until this situation is resolved, we can be thankful that the uncanny valley phenomenon gives us yet another reason to stop and think about what makes us human.

When the lines between the real and the virtual, and the artificial and the “natural”, become increasingly blurred, highlighting the differences helps us understand the strengths and weaknesses of both.